Many lawyers approach AI tools with high expectations, only to be met with vague answers or responses that miss the point. The frustration is understandable. Legal work demands precision, context, and disciplined reasoning, yet AI outputs can feel superficial.

After a few such experiences, it is easy to conclude that “AI just doesn’t get the law,” and abandon the tool altogether.

However, unsatisfactory answers may not be a sign that the AI is incapable. More often than not, the disconnect reflects a mismatch between how lawyers naturally ask questions and how AI systems process them. AI tools rely heavily on the prompt they are given; they rarely infer missing context, spot jurisdictional nuances, or ask clarifying questions on their own. Learning to prompt effectively is therefore the key to turning AI from a source of confusion into a genuinely useful legal assistant. With the right prompting techniques, lawyers can guide AI to deliver clearer reasoning, better-structured analysis, and outputs that fit real legal workflows.

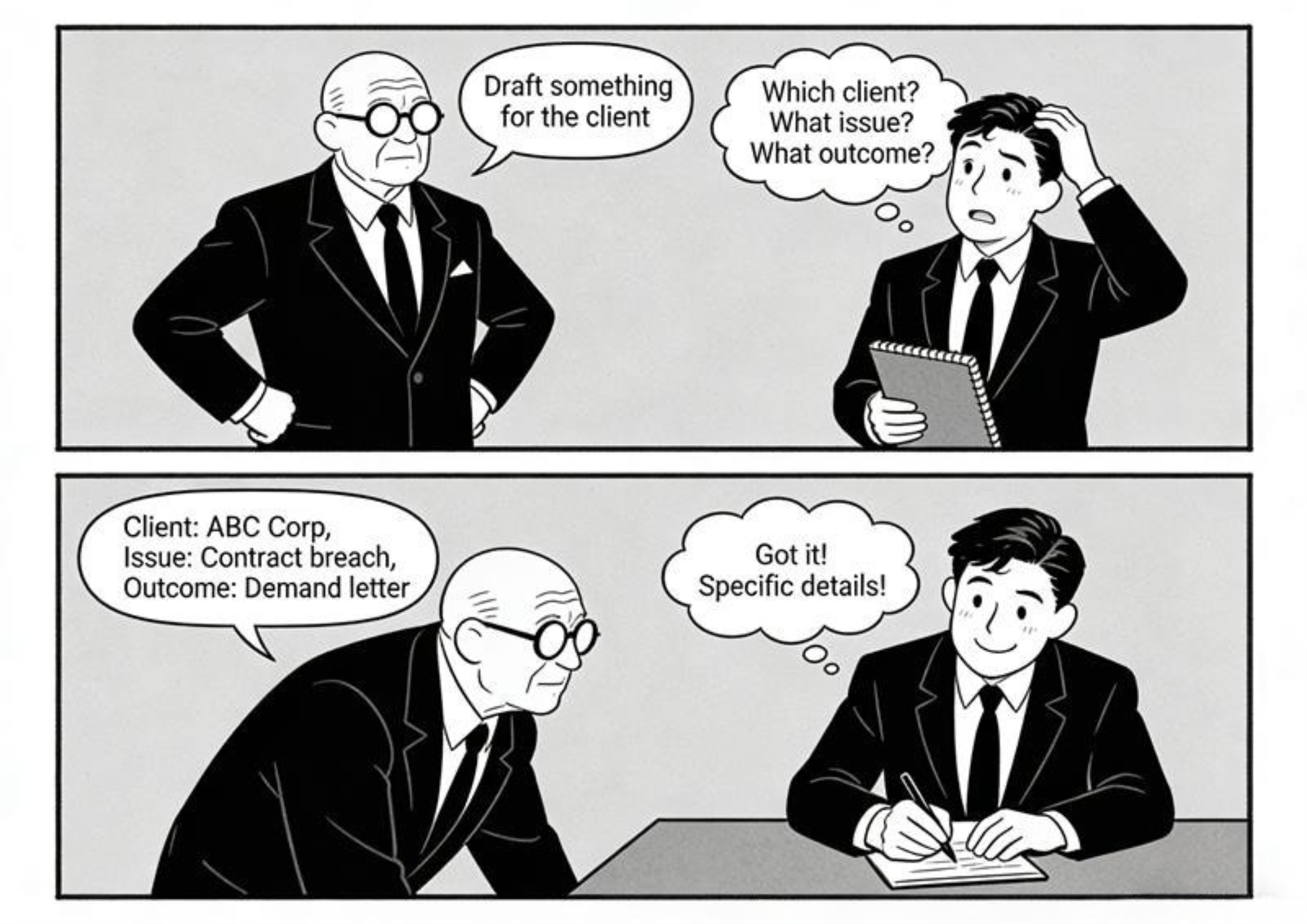

Picture this: you ask a junior to “draft something for the client” without specifying which client, what the issue is, or what outcome you want. It is hard to imagine where they would even start, let alone what the resulting draft would look like. The problem would likely not be incompetence, but instructions too vague to anchor their work. Compare that with giving the junior proper background: the client’s identity, the recent hearing, the specific timeline that needs to be summarised, the appropriate tone, and the purpose of the communication. With these details, the junior can produce a far more precise and useful draft.

AI behaves the same way. A prompt like “Draft a message to the client” forces the system to guess your intent, often producing outputs that need substantial rewriting. But when you provide context (jurisdiction, factual background, procedural posture, tone, audience, and desired outcome), the AI becomes a far more reliable and efficient collaborator. Effective legal prompting is not about using more words, but about giving the right information in the right way, so that the tool can perform with the clarity and precision you would expect from any well‑briefed team member.

We suggest keeping three overarching principles in mind when approaching legal prompting: Clarity of Information, Structure and Organization, and Strategic Thinking Approach.

1. Clarity of Information: Ensuring the prompt is explicit, unambiguous, and context‑rich.

What This Means

- State the legal issue plainly

  - Avoid vague phrasing. Specify the exact point of law or factual scenario.

- Define the jurisdiction

  - Laws vary: whether a question arises under Hong Kong, UK, or US law can drastically change the answer.

- Be explicit about the task

  - State whether you want a summary, analysis, comparison, draft clause, or checklist.

- Provide relevant facts only

  - Include material facts and omit irrelevant background that may distract the analysis.

- Specify constraints

  - Page or word limits, tone, format, or target audience (e.g., internal memo, client-friendly language).

Examples

- “Summarize the key legal tests for self-defence under Hong Kong criminal law, focusing on HKCFA cases.”

- “Explain whether the doctrine of severance applies to non-compete clauses under Hong Kong employment law.”

- “Draft a client-friendly explanation (about 150 words) of the limitation period for personal injury claims under Cap. 347.”
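For readers who assemble prompts programmatically (for example, in a firm’s internal tooling), the clarity checklist above can be captured as a simple template so that no element is forgotten. The Python sketch below is purely illustrative: the `LegalPrompt` class and its field names are hypothetical and not part of Lexis+ AI or any other product. It simply labels each element (jurisdiction, issue, task, material facts, constraints) explicitly, and the usage mirrors the Cap. 347 example above.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class LegalPrompt:
    """Hypothetical template for the five clarity elements discussed above."""

    issue: str         # the legal issue, stated plainly
    jurisdiction: str  # e.g. "Hong Kong"; the answer can change with it
    task: str          # summary, analysis, comparison, draft clause, checklist
    facts: List[str] = field(default_factory=list)        # material facts only
    constraints: List[str] = field(default_factory=list)  # length, tone, format, audience

    def render(self) -> str:
        """Assemble the elements into one explicit, labelled prompt."""
        lines = [
            f"Jurisdiction: {self.jurisdiction}",
            f"Legal issue: {self.issue}",
            f"Task: {self.task}",
        ]
        # Optional sections are only emitted when they were supplied.
        if self.facts:
            lines.append("Material facts:")
            lines.extend(f"- {fact}" for fact in self.facts)
        if self.constraints:
            lines.append("Constraints:")
            lines.extend(f"- {item}" for item in self.constraints)
        return "\n".join(lines)


# Illustrative usage, mirroring the Cap. 347 example above.
prompt = LegalPrompt(
    issue="Limitation period for personal injury claims under Cap. 347",
    jurisdiction="Hong Kong",
    task="Draft a client-friendly explanation",
    constraints=["About 150 words", "Client-friendly language"],
)
print(prompt.render())
```

Labelling each element separately makes gaps obvious: if the jurisdiction or task line is empty, the prompt is probably too vague to anchor the AI’s answer.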

2. Structure and Organization: Guiding the model to present information in usable, predictable legal formats.

What This Means

- Break down complex queries into components

  - E.g., Facts → Issues → Law → Application → Risks → Recommendations.

- Ask for step-by-step reasoning

  - Helps ensure logical coherence in legal analysis.

- Assign roles if helpful

  - “Act as a Hong Kong criminal law researcher…”

Examples

- “Organize the output into:

  - Key statutory provisions

  - Leading authorities

  - Practical implications for criminal defence practice.”

- “Create a table comparing the remedies available under contract law vs. tort law in Hong Kong.”
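Where prompts are assembled programmatically, the structuring techniques above (assigning a role, asking for step-by-step reasoning, and fixing the output outline) can be applied consistently. The sketch below is a hypothetical illustration: the `structure_prompt` function and its default headings are assumptions made for this example, not a feature of any particular AI tool. The defaults follow the Facts → Issues → Law → Application → Risks → Recommendations breakdown described above.

```python
from typing import List, Optional

# Hypothetical default outline, following the breakdown suggested above.
DEFAULT_SECTIONS = ["Facts", "Issues", "Law", "Application", "Risks", "Recommendations"]


def structure_prompt(question: str,
                     sections: Optional[List[str]] = None,
                     role: Optional[str] = None,
                     step_by_step: bool = True) -> str:
    """Wrap a question with an optional role, a reasoning request and a numbered outline."""
    sections = sections or DEFAULT_SECTIONS
    parts = []
    if role:
        parts.append(f"Act as {role}.")
    parts.append(question)
    if step_by_step:
        parts.append("Reason step by step before stating conclusions.")
    parts.append("Organize the output under these headings, in this order:")
    # Numbered headings make the expected output order explicit.
    parts.extend(f"{i}. {name}" for i, name in enumerate(sections, start=1))
    return "\n".join(parts)


# Illustrative usage, based on the criminal defence example above.
print(structure_prompt(
    "Summarize the law on self-defence under Hong Kong criminal law.",
    sections=["Key statutory provisions", "Leading authorities",
              "Practical implications for criminal defence practice"],
    role="a Hong Kong criminal law researcher",
))
```

Fixing the headings up front trades some flexibility for predictability: the answer may be less free-form, but it arrives in a format that can be checked and reused in a memo.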

3. Strategic Thinking Approach: Using prompts that encourage deeper analysis, foresight, risk assessment, and legal strategy.

What This Means

- Ask for alternative legal arguments

  - Defence vs. Prosecution, Plaintiff vs. Defendant perspectives.

- Request identification of risks or gaps

  - E.g., evidential weaknesses, procedural hurdles.

- Probe for assumptions or missing facts

  - As you would in real legal analysis.

- Seek strategic recommendations

  - Next steps, lines of inquiry, potential arguments to run or avoid.

- Prompt for scenario testing

  - “If X changes, how does the analysis shift?”

Examples

- “List any unstated assumptions that may affect the conclusion and suggest follow‑up questions a lawyer should ask the client.”

- “What are the chances that a court will grant leave for judicial review on procedural impropriety grounds in this scenario?”

- “How would the analysis change if the injury occurred on private property rather than a public footpath?”

Lawyers who learn to guide AI with precision can unlock genuinely valuable support for research, drafting, and strategic thinking. Often, the gap between an adequate prompt and a good one makes a world of difference to the quality of the answers returned.

If you’re interested in going deeper, we invite you to join our monthly Legal Prompting series in the Lexis+ AI Hong Kong Legal Prompting Course and Certificate Program, where you can learn more prompting tips and tricks for eliciting better answers from AI tools and get certified in Legal AI.

Subscribe to receive more updates

Email: marketing.hk@lexisnexis.com

Telephone number: +852 2179-7888